Stability and Performance Analysis of Control Systems Subject to Bursts of Deadline Misses

Computation keeps getting cheaper, and a lot of dedicated core capacity is now available. Because of this, we are adding components to our control systems, such as anomaly detectors and predictive maintenance algorithms, that put this computational power to good use. However, this additional load may come at a cost: problems and bugs that manifest themselves as deadline misses, where the controller that should regulate the plant does not manage to compute a fresh control signal on time.

The paper “Stability and Performance Analysis of Control Systems Subject to Bursts of Deadline Misses”, which received the best paper award at ECRTS 2021, sets out to answer the question: should we worry about this? Control systems are designed to be robust to a large set of disturbances, ranging from noise to unmodelled dynamics. Are computational delays and faults a problem as well?

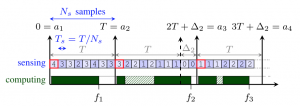

Recent work on the weakly hard model, applied to controllers, has shown that control tasks can also be inherently robust to deadline misses. However, existing exact analyses are limited to the stability of the closed-loop system. In the paper, we show that stability is important but cannot be the only factor in determining whether the behaviour of a system under deadline misses is acceptable. We focus on systems that experience bursts of deadline misses and on their recovery to normal operation. We apply the resulting comprehensive analysis, which covers both stability and performance, to a Furuta pendulum, comparing simulated data with data obtained from the real plant.
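
The weakly hard model constrains how deadline hits and misses may be distributed over a sequence of jobs. As a minimal sketch (the function names and the trace format, with `True` for a hit and `False` for a miss, are illustrative assumptions, not taken from the paper), the following Python checks two common constraint types on a recorded trace: the length of the longest burst of consecutive misses, and whether every window of `K` consecutive jobs contains at most `m` misses.

```python
from typing import Sequence


def max_consecutive_misses(outcomes: Sequence[bool]) -> int:
    """Length of the longest burst of misses (True = hit, False = miss)."""
    longest = current = 0
    for hit in outcomes:
        current = 0 if hit else current + 1
        longest = max(longest, current)
    return longest


def at_most_m_misses_in_K(outcomes: Sequence[bool], m: int, K: int) -> bool:
    """True if every window of K consecutive jobs has at most m misses.

    Traces shorter than K are checked as a single partial window.
    """
    if K <= 0:
        raise ValueError("K must be positive")
    misses = sum(1 for hit in outcomes[:K] if not hit)
    if misses > m:
        return False
    for i in range(K, len(outcomes)):
        # Slide the window by one job: add job i, drop job i - K.
        misses += (0 if outcomes[i] else 1) - (0 if outcomes[i - K] else 1)
        if misses > m:
            return False
    return True


# Two hits, a burst of three misses, then five hits.
trace = [True, True, False, False, False] + [True] * 5
print(max_consecutive_misses(trace),
      at_most_m_misses_in_K(trace, 3, 10),
      at_most_m_misses_in_K(trace, 2, 5))
```

For this trace the longest burst has three misses, so it satisfies the window constraint with `m = 3, K = 10` but violates it with `m = 2, K = 5`. The sliding window keeps the check linear in the trace length.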

We further evaluate our analysis using a benchmark set of 133 systems, which is considered representative of industrial control plants. Our results show that the way the control signal is handled when a deadline is missed is an extremely important factor in the performance degradation the controller experiences, a clear indication that a stability test alone says too little about robustness to deadline misses.
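
To illustrate why the handling of the control signal matters, here is a toy simulation, not the paper's analysis: a scalar unstable plant `x[k+1] = a*x[k] + b*u[k]` under state feedback `u[k] = -gain*x[k]`, where on a miss the actuator either holds the previous control value or applies zero. The plant parameters, the `"hold"`/`"zero"` names and the squared-state cost are assumptions chosen for illustration, and the sketch idealizes timing by applying a fresh control value in the same period it is computed.

```python
from typing import List, Sequence


def simulate(outcomes: Sequence[bool], strategy: str,
             a: float = 1.2, b: float = 1.0, gain: float = 1.0,
             x0: float = 1.0) -> List[float]:
    """Simulate x[k+1] = a*x[k] + b*u[k] with u[k] = -gain*x[k] on a hit.

    On a miss, "hold" re-applies the previous control value (0.0 if no
    job has completed yet) and "zero" applies u = 0.
    Returns the state trajectory x[0..N].
    """
    if strategy not in ("hold", "zero"):
        raise ValueError(f"unknown strategy: {strategy}")
    x, u_prev = x0, 0.0
    trajectory = [x]
    for hit in outcomes:
        if hit:
            u = -gain * x        # fresh control signal computed on time
        elif strategy == "hold":
            u = u_prev           # actuator keeps the last value
        else:
            u = 0.0              # actuator output is reset to zero
        x = a * x + b * u
        u_prev = u
        trajectory.append(x)
    return trajectory


def cost(trajectory: Sequence[float]) -> float:
    """Quadratic cost: sum of squared states over the horizon."""
    return sum(v * v for v in trajectory)


# One hit, a burst of three misses, then normal operation.
trace = [True, False, False, False] + [True] * 8
for strategy in ("zero", "hold"):
    traj = simulate(trace, strategy)
    print(strategy, max(abs(v) for v in traj), cost(traj))
```

With these numbers, holding the stale control value `u = -1` during the burst drives the state to about `-3.29`, while zeroing lets the mildly unstable plant drift only to about `0.35`, so the hold strategy accumulates a much larger cost. Both runs recover once jobs complete again, which is why a stability test alone cannot rank them; with other plants or controllers the ranking between the two strategies can be different.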

When a controller is already in the production phase, only small modifications are allowed (changing some constants here and there), but these can go a long way towards enforcing robustness. In the paper, we describe one such small modification to an existing control architecture and implementation, and show that using knowledge of past misses can improve the controller's performance.
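
The paragraph above does not spell out the modification, so the following is only a hypothetical illustration of the general idea of using knowledge of past misses, not the paper's design: the control task counts consecutive misses and, on the first job that completes after a burst, picks its gain from a small table indexed by the burst length. The class `MissAwareController` and the gain values are placeholders; in practice such constants would have to come from an analysis of the specific plant.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class MissAwareController:
    """Hypothetical state feedback u = -K * x whose gain depends on recent misses.

    gains[n] is used on the first job that completes after n consecutive
    misses; bursts longer than the table reuse the last entry.
    The gain values are illustrative placeholders.
    """
    gains: List[float]
    consecutive_misses: int = 0

    def __post_init__(self) -> None:
        if not self.gains:
            raise ValueError("gains must contain at least one entry")

    def on_miss(self) -> None:
        # The job did not finish before its deadline: remember it.
        self.consecutive_misses += 1

    def on_hit(self, x: float) -> float:
        # Pick the gain associated with the burst length just experienced.
        n = min(self.consecutive_misses, len(self.gains) - 1)
        self.consecutive_misses = 0   # a completed job ends the burst
        return -self.gains[n] * x


ctrl = MissAwareController(gains=[1.0, 1.1, 1.2])
ctrl.on_miss()
u = ctrl.on_hit(0.5)   # uses gains[1] after a single miss
```

The counter is reset by every completed job, so the table only needs as many entries as the longest burst the designer wants to treat specially, and changing the behaviour amounts to changing a few constants.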
